Synthetic Data: How to Use LLMs to Improve the Performance of LLMs (WizardLM)

The rapid advancement of large language models (LLMs) has been one of the most significant developments in AI in recent years. As these models grow in size, the demand for high-quality training data, especially supervised fine-tuning (SFT) data, has become increasingly critical. LLMs require vast amounts of diverse, high-quality data to perform well. However, acquiring such data presents several challenges:

Data Scarcity: For many specialized domains or rare events, obtaining sufficient real-world data can be extremely difficult.

Data Privacy: The use of real-world data often raises significant privacy concerns, particularly when dealing with sensitive information.

Data Bias: Real-world datasets can inadvertently contain biases that may be amplified by the model during training.

Data Labeling: Manually labeling large datasets is time-consuming and expensive.

The Rise of Synthetic Data:

Synthetic data offers a promising solution to these challenges. By generating artificial data that mimics the properties of real-world data, researchers can create large, diverse datasets tailored to specific training needs. The WizardLM paper introduces a novel approach to synthetic data generation called Evol-Instruct, which demonstrates the powerful potential of this technique.

Key Benefits of Synthetic Data in LLM Training:

Scalability: Synthetic data can be generated in large quantities, meeting the data-hungry nature of LLMs.

Customization: Data can be tailored to specific tasks or domains, allowing for more focused model training.

Privacy Preservation: Synthetic data reduces the need to use potentially sensitive real-world information.

Bias Control: Researchers can generate balanced datasets that help mitigate biases present in real-world data.

Cost-Effectiveness: Once the generation process is established, synthetic data can be produced more economically than collecting and labeling real-world data.

The Evol-Instruct Approach:

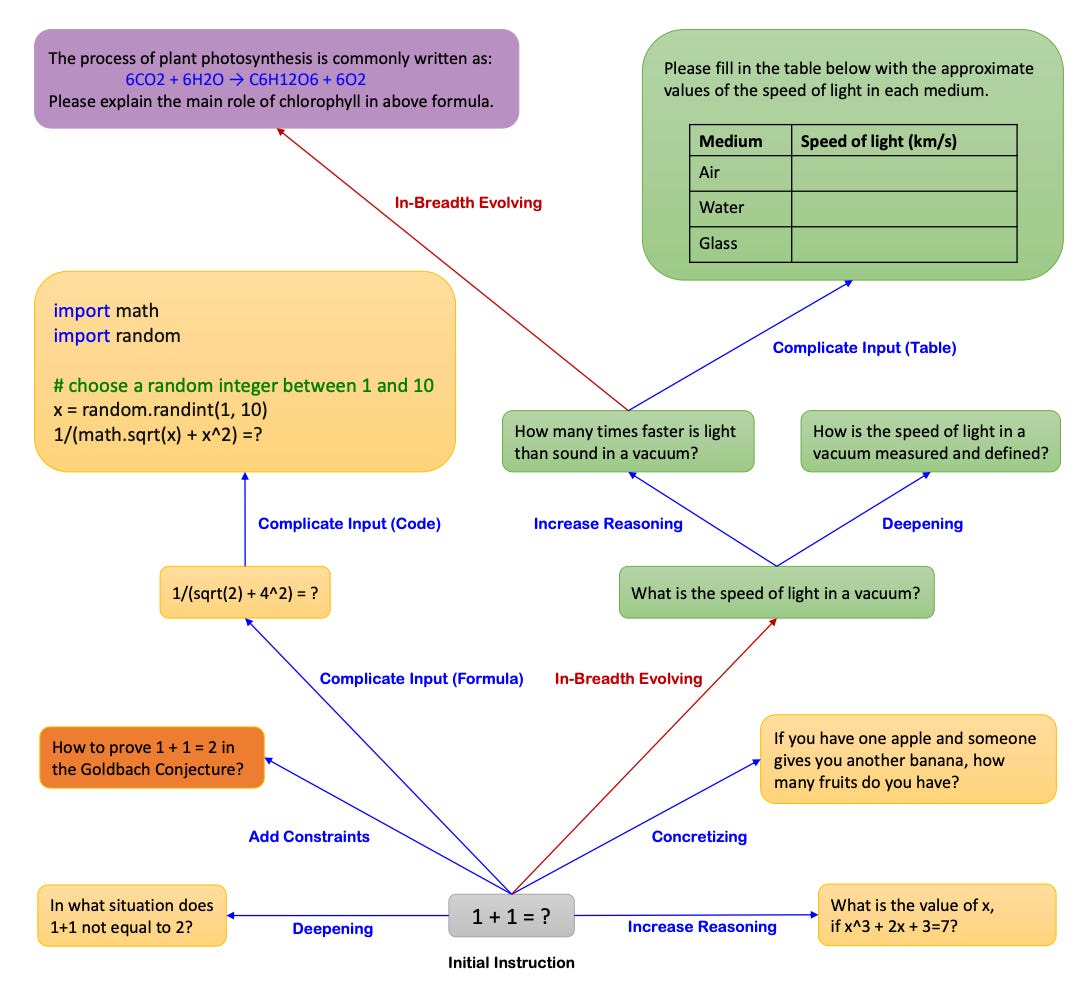

The WizardLM paper introduces Evol-Instruct, a method that uses an LLM to generate complex, open-domain instructions. It addresses a critical limitation of existing instruction-following datasets: they contain few high-complexity instructions. Starting from 52k seed instructions, the WizardLM team generated 250k instructions. The image above shows how a single initial instruction evolved into more than 10 instructions.

Let’s explore the Evol-Instruct process using a simple initial query: “1+1=?”

Evolutionary Process: Starting with simple instructions, the method prompts an LLM (ChatGPT in the WizardLM paper) to rewrite them into more complex versions. This process involves two main types of evolution:

In-Depth Evolution: This type aims to increase the complexity and difficulty of existing instructions. It includes five subtypes:

Add Constraints: "In what situation does 1+1 not equal to 2?" This evolution adds a constraint by asking for exceptions to the rule.

Deepening: "How to prove 1 + 1 = 2 in the Goldbach Conjecture?" This evolution deepens the inquiry by connecting it to a complex mathematical concept.

Concretizing: "If you have one apple and someone gives you another banana, how many fruits do you have?" This evolution replaces the abstract numbers with concrete objects.

Increase Reasoning Steps: "What is the value of x, if x^3 + 2x + 3 = 7?" This evolution increases the number of steps required to solve the problem.

Complicate Input: "1/(sqrt(2) + 4^2) = ?" This evolution complicates the input by introducing more complex mathematical operations.

In-Breadth Evolution: "What is the speed of light in a vacuum?" Instead of making the original instruction harder, this evolution generates a new instruction on a related but less common topic (here, a fundamental physical constant), broadening topic coverage.
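Both kinds of evolution come down to wrapping an instruction in an evolving prompt and sending it to an LLM. The Python sketch below shows that wrapping step. The template wording is a hypothetical paraphrase written for illustration, not the exact prompts from the WizardLM paper. The names `IN_DEPTH_TEMPLATES`, `IN_BREADTH_TEMPLATE`, and `build_evolution_prompt` are also made up.

```python
# Hypothetical paraphrases of the five in-depth operations (NOT the paper's exact prompts).
IN_DEPTH_TEMPLATES = {
    "add_constraints": "Add one more constraint or requirement to the instruction.",
    "deepening": "Increase the depth and breadth of the inquiry in the instruction.",
    "concretizing": "Replace general concepts in the instruction with more specific ones.",
    "increase_reasoning": "Rewrite the instruction so that it explicitly requires multiple reasoning steps.",
    "complicate_input": "Make the input of the instruction more complex, e.g. harder expressions or data.",
}

# Hypothetical paraphrase of the single in-breadth operation.
IN_BREADTH_TEMPLATE = (
    "Create a brand-new instruction in the same domain as the one below, "
    "but on a rarer topic of similar length and difficulty."
)


def build_evolution_prompt(instruction: str, kind: str) -> str:
    """Wrap `instruction` in the evolving prompt for the given evolution kind."""
    if kind == "in_breadth":
        task = IN_BREADTH_TEMPLATE
    elif kind in IN_DEPTH_TEMPLATES:
        task = (
            "Rewrite the instruction below into a more complex version that is still "
            "reasonable and answerable by humans. " + IN_DEPTH_TEMPLATES[kind]
        )
    else:
        raise ValueError(f"unknown evolution kind: {kind}")
    # The markers delimit the input and the expected output for the LLM.
    return f"{task}\n\n#Given Instruction#:\n{instruction}\n\n#Rewritten Instruction#:"


if __name__ == "__main__":
    print(build_evolution_prompt("1+1=?", "concretizing"))
```

Keeping one template per operation makes it easy to add, remove, or reword an operation without touching the rest of a generation pipeline.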

Diversity: The process generates a wide range of instruction types, covering various domains and difficulty levels. In our example, we see evolution from basic arithmetic to complex mathematics, physics, and even abstract reasoning. This is achieved by randomly selecting one of the six evolution prompts (five from in-depth and one from in-breadth) for each instruction in each round of evolution.
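The random selection step can be sketched as follows. The sketch assumes a uniform choice over the six operations, which is one reasonable reading of "randomly selecting". `EVOLUTION_KINDS` and `assign_evolutions` are hypothetical names.

```python
import random
from collections import Counter

# The six evolution operations described above: five in-depth, one in-breadth.
EVOLUTION_KINDS = [
    "add_constraints",
    "deepening",
    "concretizing",
    "increase_reasoning",
    "complicate_input",
    "in_breadth",
]


def assign_evolutions(instructions, rng):
    """Pick one evolution kind uniformly at random for each instruction in this round."""
    return [(inst, rng.choice(EVOLUTION_KINDS)) for inst in instructions]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is reproducible
    counts = Counter(kind for _, kind in assign_evolutions(["1+1=?"] * 600, rng))
    print(counts)  # each kind should appear roughly 100 times
```

Passing a seeded `random.Random` instead of calling the module-level functions keeps a generation run reproducible and easy to debug.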

Quality Control: An instruction eliminator filters out low-quality or failed evolutions so that the final dataset stays high quality. The elimination process considers factors such as:

Whether the evolved instruction provides any information gain compared to the original. For instance, "1+1=2?" would be eliminated as it doesn't add complexity to the original query.

The ability of the LLM to generate a response to the evolved instruction. If the LLM struggles to answer "How to prove 1 + 1 = 2 in the Goldbach Conjecture?", this instruction might be eliminated.

The presence of copied words from the evolving prompt in the evolved instruction. An instruction like "Rewrite the prompt 1+1=?" would be eliminated as it copies the prompt structure.
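The three elimination checks above can be approximated with simple heuristics, as in this hypothetical Python sketch. The paper uses ChatGPT to judge information gain, and the token-overlap test here is only a crude lexical substitute for that judgment. The apology check roughly follows a heuristic the paper describes: a short response containing "sorry". Treat the 80-word threshold and the leaked phrases in `PROMPT_LEAK_MARKERS` as assumptions.

```python
import re

# Hypothetical phrases that leak from the evolving prompt into a bad evolution.
PROMPT_LEAK_MARKERS = ("given prompt", "rewritten prompt", "rewrite the prompt", "#given", "#rewritten")


def _tokens(text: str) -> set:
    """Split into lowercase word and symbol tokens, e.g. '1+1=?' -> {'1', '+', '=', '?'}."""
    return set(re.findall(r"\w+|[^\w\s]", text.lower()))


def no_information_gain(original: str, evolved: str, min_new_tokens: int = 2) -> bool:
    # Crude stand-in for the LLM judgment used in the paper: an evolution that
    # introduces almost no new tokens is treated as adding nothing.
    return len(_tokens(evolved) - _tokens(original)) < min_new_tokens


def struggles_to_respond(response: str, max_words: int = 80) -> bool:
    # A short reply that apologizes usually means the model could not answer.
    return "sorry" in response.lower() and len(response.split()) < max_words


def copies_prompt(evolved: str) -> bool:
    # The evolution leaked wording from the evolving prompt itself.
    lowered = evolved.lower()
    return any(marker in lowered for marker in PROMPT_LEAK_MARKERS)


def is_failed_evolution(original: str, evolved: str, response: str) -> bool:
    """Return True if the evolved instruction should be eliminated."""
    return (
        no_information_gain(original, evolved)
        or struggles_to_respond(response)
        or copies_prompt(evolved)
    )


if __name__ == "__main__":
    print(is_failed_evolution("1+1=?", "1+1=2?", "Yes."))                 # True: no new information
    print(is_failed_evolution("1+1=?", "Rewrite the prompt 1+1=?", "2"))  # True: copied prompt wording
```

Because each check is a separate function, a real pipeline could swap `no_information_gain` for an LLM-based judge without changing the other checks.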

This process is repeated for multiple rounds, with each round potentially increasing the complexity and diversity of the instructions. The result is a rich, varied dataset of instructions that can challenge and improve the capabilities of Large Language Models.
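A multi-round loop might look like the sketch below. It assumes a hypothetical `llm` callable (prompt in, text out) and a pluggable eliminator that implements the quality-control checks described above. Three details are illustrative choices and do not come from this article: the placeholder prompt, the default of four rounds, and the policy of keeping a failed instruction in the pool so it can be retried.

```python
import random
from typing import Callable, List, Tuple

# Hypothetical interface: `llm` maps a prompt string to a completion string.
LLM = Callable[[str], str]
# Hypothetical interface: returns True when (original, evolved, response) should be eliminated.
Eliminator = Callable[[str, str, str], bool]

EVOLUTION_KINDS = ["add_constraints", "deepening", "concretizing",
                   "increase_reasoning", "complicate_input", "in_breadth"]


def make_evolution_prompt(instruction: str, kind: str) -> str:
    """Placeholder evolving prompt; a real pipeline would use a full template per kind."""
    return f"Evolution type: {kind}\nInstruction: {instruction}\nRewritten instruction:"


def evol_instruct(seeds: List[str], llm: LLM, rng: random.Random,
                  is_failed: Eliminator, rounds: int = 4) -> List[Tuple[str, str]]:
    """Run several rounds of evolution and return (instruction, response) pairs."""
    dataset = [(seed, llm(seed)) for seed in seeds]
    pool = list(seeds)
    for _ in range(rounds):
        next_pool = []
        for instruction in pool:
            kind = rng.choice(EVOLUTION_KINDS)  # one random operation per instruction
            evolved = llm(make_evolution_prompt(instruction, kind))
            response = llm(evolved)
            if is_failed(instruction, evolved, response):
                # Assumed policy: keep the original so it can be retried next round.
                next_pool.append(instruction)
            else:
                dataset.append((evolved, response))
                next_pool.append(evolved)
        pool = next_pool
    return dataset
```

Suppose `llm` is a toy function such as `lambda p: p + "!"` and the eliminator never rejects anything. Then two seeds and four rounds yield 2 + 2 × 4 = 10 instruction-response pairs, which makes the growth of the dataset easy to see. In practice, `llm` would wrap a real chat model, and each response would serve as the SFT target for its instruction.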

Results:

The WizardLM study demonstrates the significant impact of training on synthetic data generated with Evol-Instruct. The authors benchmarked WizardLM against open-source models such as Alpaca and Vicuna, as well as the closed-source ChatGPT.

Overall, in human evaluation on the Evol-Instruct test set, WizardLM achieved a 12.4% higher win rate than Vicuna, and on Vicuna's own test set it still achieved a 3.8% higher win rate. On the high-complexity subset of the Evol-Instruct test set, human evaluators even preferred WizardLM's outputs to ChatGPT's.

This suggests that the synthetic data not only improved quantitative metrics, but also enhanced the quality of responses in ways that are noticeable to human users.

These results demonstrate that synthetic data generated by LLMs can significantly enhance the capabilities of LLMs, particularly in handling complex tasks. The improvements seen in WizardLM highlight the potential of using AI-evolved instructions to push the boundaries of what LLMs can achieve, potentially narrowing the gap between open-source and commercial models.